Large AGI models typically require large-scale, high-performance computing resources for training and inference. This poses a significant challenge for many businesses and organizations, which must invest substantial funds and infrastructure to meet these computing demands.

Choosing the right algorithms and optimization methods is crucial to training efficiency. Speeding up training, reducing memory usage, cutting the number of parameters and the computational complexity, and adopting strategies such as distributed training can all improve it. Algorithms and optimization techniques tailored to specific tasks and hardware platforms are equally important.
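To make the link between parameter count, distributed training, and memory usage concrete, the following is a rough back-of-the-envelope sketch, not part of the solution itself. It assumes mixed-precision training with the Adam optimizer, where model states (weights, gradients, and optimizer state) take roughly 16 bytes per parameter, and it assumes those states are fully sharded across devices. Activations, buffers, and framework overhead are ignored. The function name `training_memory_gib` and the 7-billion-parameter model are hypothetical and used only for illustration.

```python
def training_memory_gib(num_params, bytes_per_param=16, num_devices=1):
    """Rough per-device memory (GiB) for model states only.

    Assumptions (illustrative, not measured):
    - bytes_per_param=16 approximates mixed-precision Adam:
      2 (fp16 weights) + 2 (fp16 gradients) + 12 (fp32 weights, momentum, variance).
    - Model states are evenly sharded across num_devices.
    - Activations and framework overhead are not counted.
    """
    if num_devices < 1:
        raise ValueError("num_devices must be at least 1")
    total_bytes = num_params * bytes_per_param
    return total_bytes / num_devices / 2**30


# Hypothetical 7-billion-parameter model on different device counts.
for devices in (1, 8, 64):
    gib = training_memory_gib(7_000_000_000, num_devices=devices)
    print(f"{devices:>3} device(s): about {gib:.1f} GiB of model states per device")
```

Under these assumptions, halving the parameter count or doubling the number of devices halves the per-device memory for model states, which is why both levers appear in the list above.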

Users need to adjust resources flexibly and plan their usage strategies as business needs change, so that training and inference cost less and run more efficiently.

Solution Features

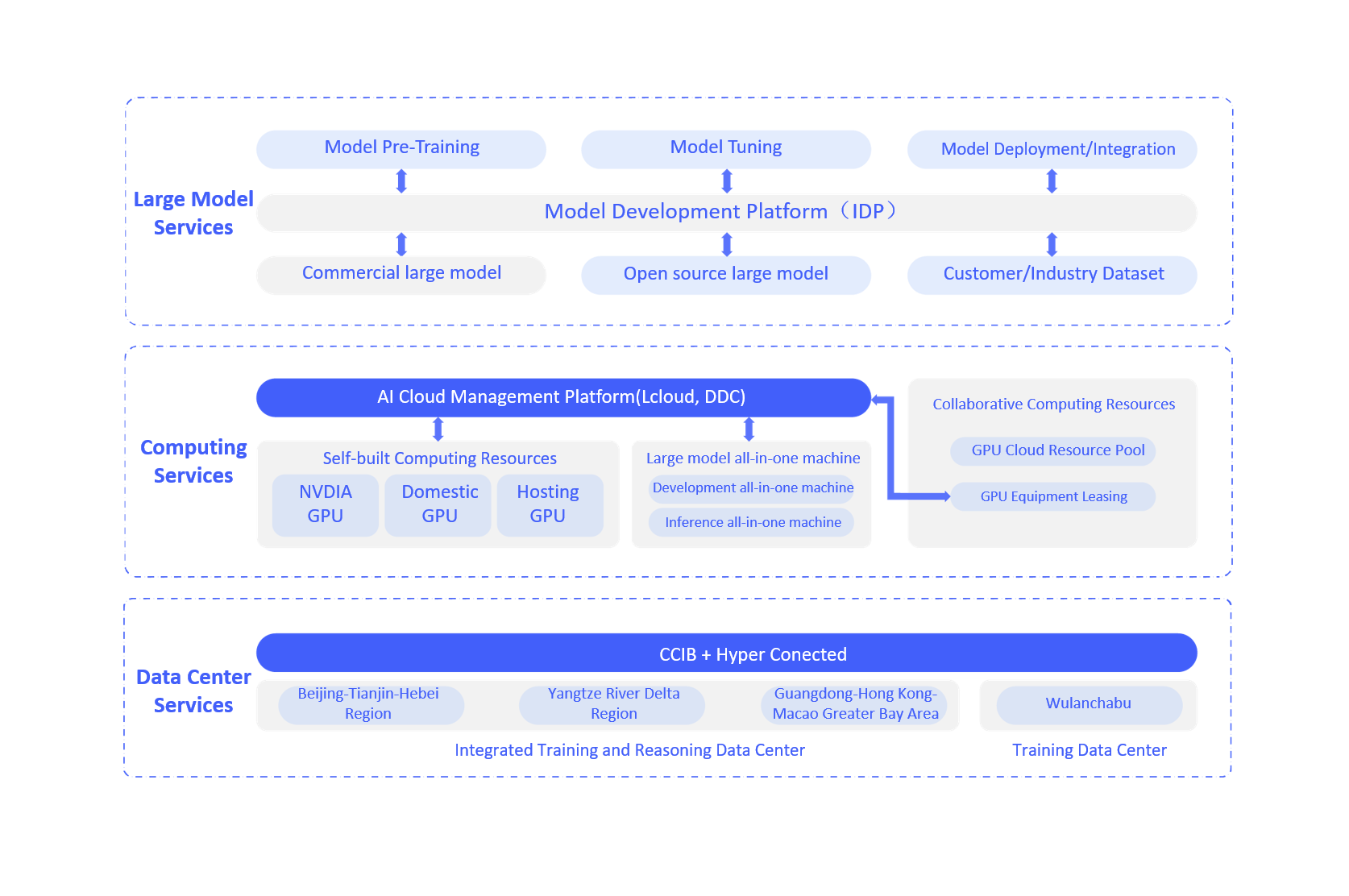

The AGI large model solution comprises three levels: infrastructure services, computational power services, and model services.

Infrastructure services include high-density server racks and a new ultra-connected computing network.

The computational power services level is built on the LCloud platform, which continues to add AI-related features while following a heterogeneous strategy (NVIDIA GPUs plus domestic GPUs). It also introduces integrated machines for model development and inference to lower the technical barrier to GPU usage.

At the model services level, the solution provides the IDP model training and development platform, an integrated development environment designed specifically for AI and large model developers. It covers the entire AI development process and helps data and algorithm engineers work more efficiently.